InDeep Cum Laude Doctorate and Consortium Workshop

Our InDeep PhD candidate Gabriele Sarti successfully defended his dissertation, From Insights to Impact: Actionable Interpretability for Neural Machine Translation, on 11 December 2025 at the University of Groningen. Gabriele is the first PhD candidate within InDeep to complete and defend his doctorate, and he did so with outstanding expertise and enthusiasm, earning the distinction of cum laude. We warmly congratulate Dr. Gabriele Sarti and wish him every success in his future career.

You can read his dissertation here.

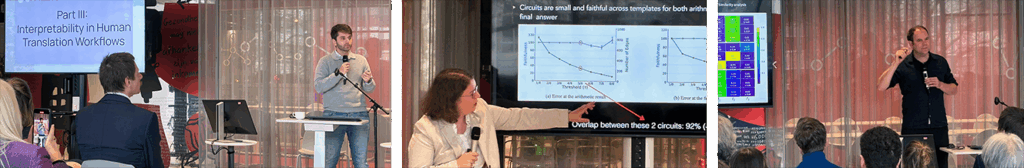

To mark this occasion, we hosted an InDeep Consortium Workshop prior to the defense. The workshop featured presentations by Gabriele and project leader Jelle Zuidema, as well as invited talks by Barbara Plank (LMU Munich), Ivan Titov (University of Amsterdam/University of Edinburgh), and Eva Vanmassenhove (Tilburg University). Following the defense, the celebration continued with a poster session and drinks.

Eva Vanmassenhove – Losing our tail, again: On (Un)natural Selection and Language Technologies

Ivan Titov – Why Do Interpretability Research? From Frustration to Actionability

Gabriele Sarti – From Insight to Impact: Actionable Interpretability for Neural Machine Translation

Barbara Plank – From Arithmetic Validation Gaps to Explanation Variation

Willem Zuidema – Mechanistic Interpretability of Time Series Transformers (and its Relevance for Language and Reasoning)

InDeep Masterclass at Deloitte

In December 2025, InDeep presented its Interpreting and Understanding LLMs and Other Deep Learning Models Masterclass at Deloitte Amsterdam. The masterclass offered an in-depth exploration of explainability and interpretability in large language models and other deep learning architectures. Featuring a series of expert-led presentations and an interactive hands-on tutorial, the event combined theoretical perspectives with practical methods for understanding how modern AI systems make decisions. Speakers addressed key challenges in explainable AI (XAI), including the trade-off between the faithfulness and the comprehensibility of explanations, the limits of existing XAI techniques, and emerging approaches such as mechanistic interpretability, attribution methods, and behavioral evaluation through challenge sets. By bringing together academic expertise and applied discussion, the masterclass gave participants a deeper understanding of the inner workings, limitations, and evaluation of generative AI systems.

Speakers:

– Gabriele Sarti (RUG Groningen) – Interpreting Large Language Models

– Jelle Zuidema (UvA) – Too Much Information: How do we generate comprehensible and faithful explanations when the input or output is…just a bit much?

– Antske Fokkens (VU) – Explanatory Evaluation and Robustness

October Workshop: How Computing is Changing the World

Workshop: “How Computing is Changing the World: Exploring Synergies and Challenges of AI and Quantum Technologies for Society”

In October 2025, the QISS and InDeep research groups met at the Sustainalab (Matrix One) in Amsterdam for a joint workshop exploring the synergies and challenges of AI and quantum technologies. As both fields advance rapidly, the workshop addressed their technological progress as well as their ethical, legal, and societal implications.

The morning session addressed technological perspectives. After a welcome, InDeep project leader Jelle Zuidema outlined the current state of AI, highlighting the black-box problem. Mehrnoosh Sadrzadeh (UCL) and Martha Lewis (UvA) followed with quantum-inspired approaches to modelling language and vision in AI. Ronald de Wolf (CWI & UvA, QuSoft) concluded with an overview of quantum computing, linking it to Zuidema’s analysis of AI. A panel discussion with Christian Schaffner (UvA, QuSoft), chaired by Joris van Hoboken (UvA), explored common challenges, research stages, and opportunities across the two domains.

The afternoon session focused on societal themes. Evert van Nieuwenburg (Leiden University) opened with a presentation on how quantum games, such as Quantum Tic-Tac-Toe, can help people learn quantum principles. Eline de Jong (UvA) shared five lessons for embedding new technologies in society and suggested that they apply to quantum technology as well. Angela van Sprang (UvA, ILLC) presented concept-bottleneck models as tools for improved AI oversight, and Matteo Fabbri (UvA) argued for proactive standards for recommender systems and other emerging technologies. In the panel discussion, led by Zuidema, participants from both AI and quantum backgrounds reflected on responsible innovation and the risks and opportunities of uncertain technological futures.

The workshop offered an engaging exchange at the intersection of technology, ethics, and society. Participants valued the lively discussions and expressed enthusiasm for organising a follow-up event in 2026, potentially establishing a recurring series on the intersections of AI and quantum technologies.

The Quantum Impact on Societal Security (QISS) consortium is based at the University of Amsterdam and analyses the ethical, legal and societal impact of the upcoming society-wide transition to quantum-safe cryptography. The consortium’s objective is to contribute to the creation of a Dutch ecosystem where quantum-safe cryptography can thrive, and mobilize this ecosystem to align technological applications with ethical, legal, and social values.

This workshop was made possible by funding from the Quantum Delta Netherlands Growth Fund program.

InDeep at Interspeech 2025

Many InDeep researchers presented at Interspeech 2025 in Rotterdam, one of the largest and most prominent conferences on speech and speech technology worldwide. We therefore also organized a social event with drinks and snacks.

This was InDeep at Interspeech 2025:

Sunday 17 August

– Speech Science Festival, 10.00-17.30: InDeep gave a demonstration of emotion decoding and removal, alongside many other demonstrations for a broad audience.

– Tutorial, 15.30-18.30: InDeep members organized the tutorial Interpretability Techniques for Speech Models.

– InDeep Social, 19.00: InDeep held a social event with drinks and snacks in the Stuurboord bar at the Foodhallen.

Monday 18 August

– Special Session on Interpretability in Audio and Speech Technology, 11.00-13.00. Session with three papers by InDeep authors:

+ On the reliability of feature attribution methods for speech classification.

+ Word stress in self-supervised speech models: A cross-linguistic comparison.

+ What do self-supervised speech models know about Dutch? Analyzing advantages of language-specific pre-training.

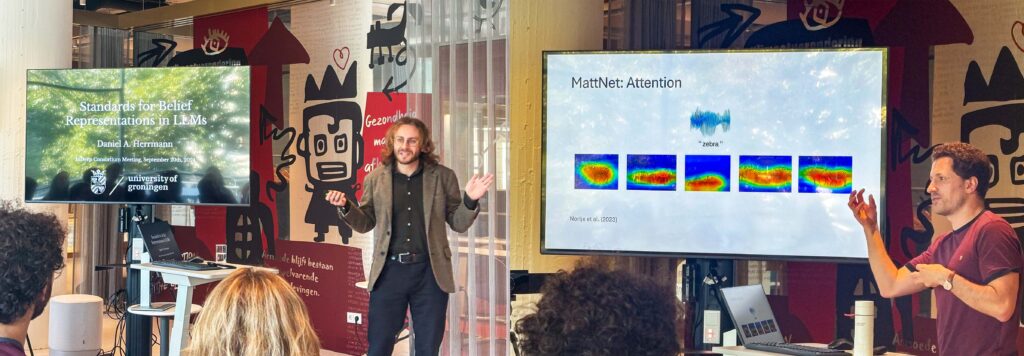

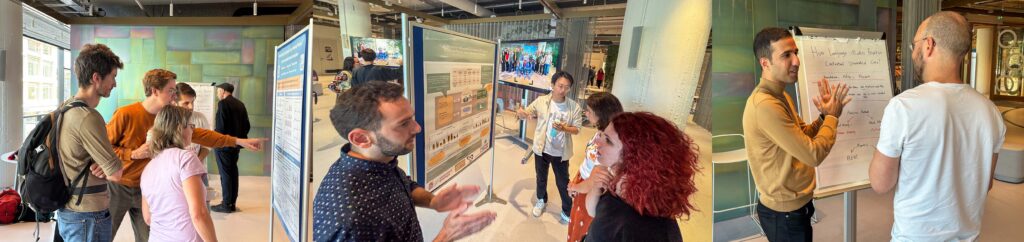

Consortium Meeting Groningen

On Friday 20 September 2024, InDeep met at the Groningen House of Connections for its next Consortium Meeting. In the morning, RUG researchers Daniel Herrmann (Philosophy of AI) and Yevgen Matusevych (Cognitive Plausibility of Modern LMs) gave illuminating talks on standards for belief representations in LLMs and on bias in visually grounded speech models, respectively.

In the afternoon, the InDeep academic partners shared highlights of their work, researchers presented their latest results in a poster session, and there were fruitful discussions on the future of interpretability methods for language, speech and music models and on future directions of the InDeep project.

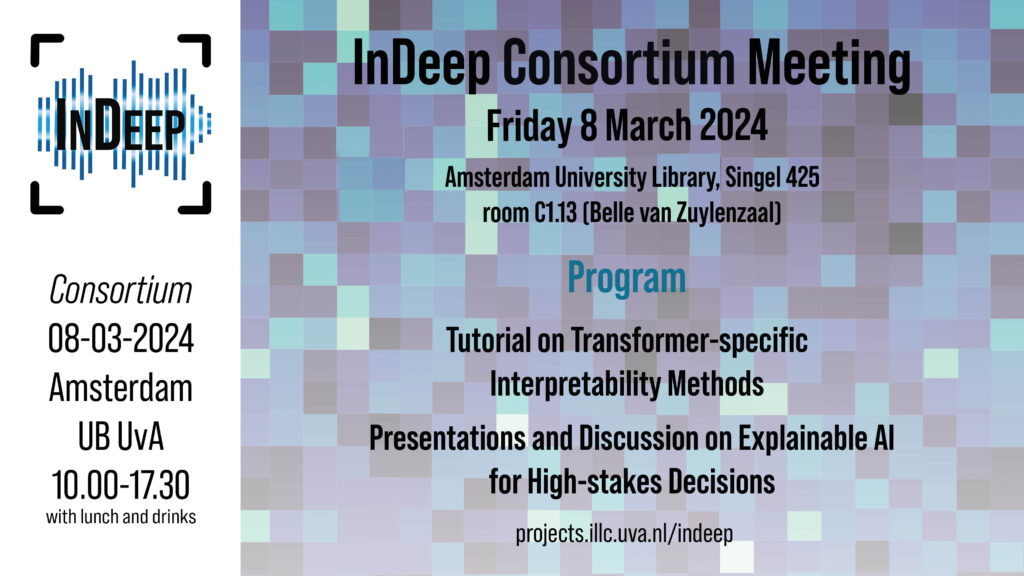

Consortium Meeting Amsterdam

On Friday 8 March 2024, InDeep held a Consortium Meeting in the Amsterdam University Library. The day featured talks on recent research progress, a tutorial, and plenty of opportunity to discuss the strengths and weaknesses of interpretability techniques for text, translation, speech and music.

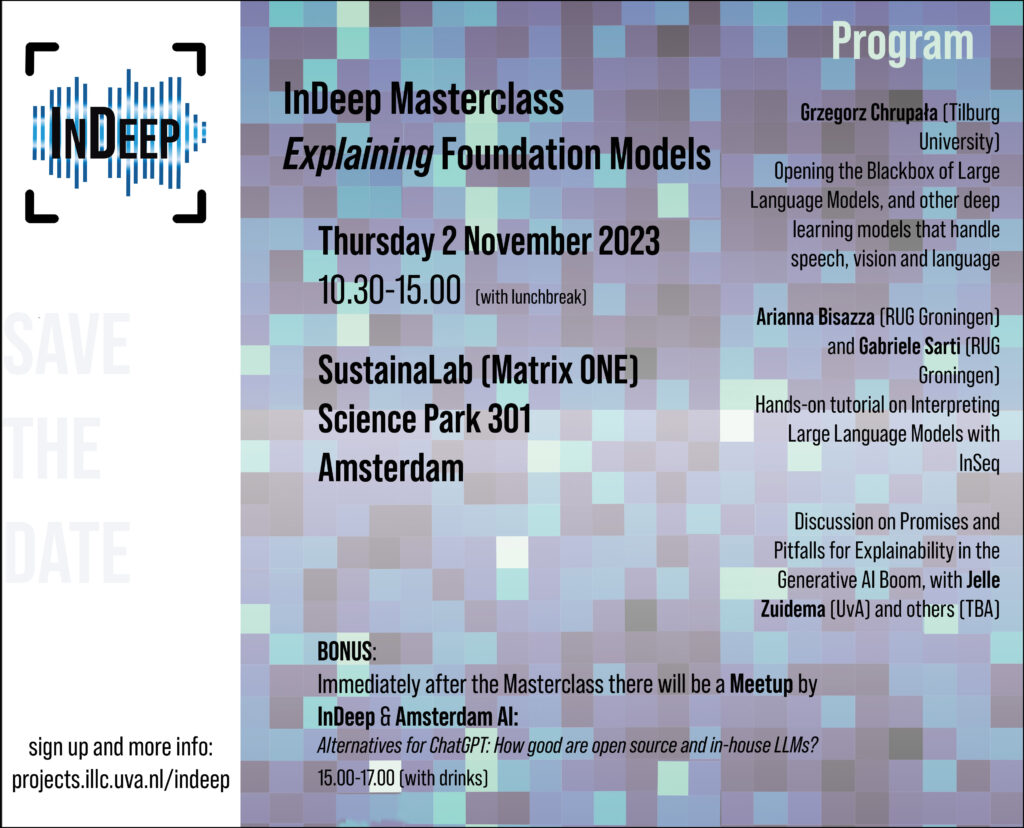

InDeep Masterclass: Explaining Foundation Models

On Thursday 2 November 2023, InDeep organized a Masterclass on Explaining Foundation Models. The event featured inspiring talks, hands-on experience with explainability techniques for large language models, and the opportunity to share insights and experiences. After the Masterclass, InDeep and Amsterdam AI organized a Meetup on “Alternatives for ChatGPT: How good are open source and in-house LLMs?” To learn more about this event, you can download an overview of the presentations and slides here.