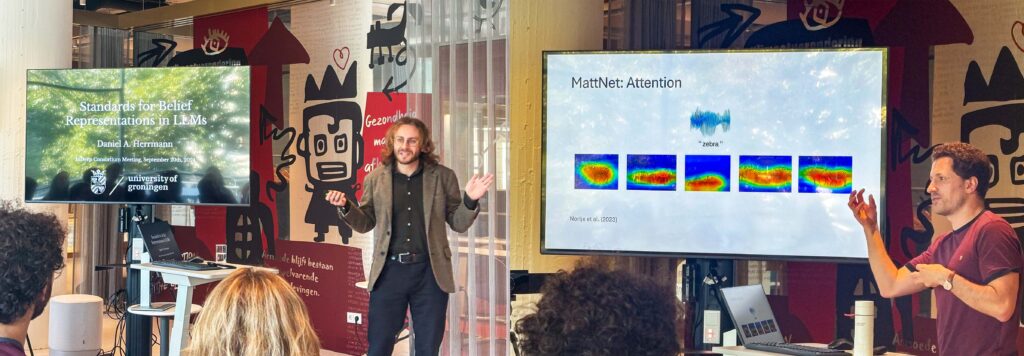

Consortium Meeting Groningen

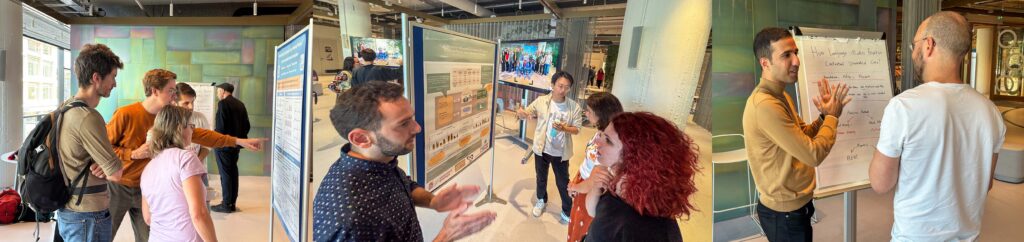

On Friday, September 20th, InDeep met at the House of Connections in Groningen for its next Consortium Meeting. In the morning, RUG researchers Daniel Herrman (Philosophy of AI) and Yevgen Matusevych (Cognitive Plausibility of Modern LMs) gave illuminating talks on standards for belief representations in LLMs and bias in visually grounded speech models.

In the afternoon, the InDeep academic partners shared highlights from their work, and researchers presented their latest results in a poster session. There were also fruitful discussions on the future of interpretability methods in language, speech and music models, and on future directions of the InDeep project.

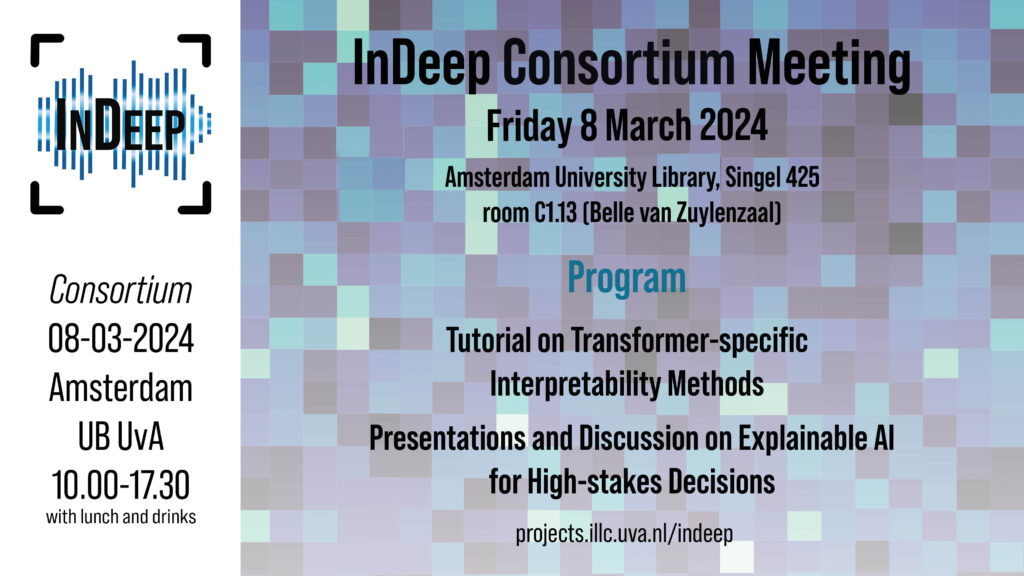

Consortium Meeting Amsterdam

On Friday, March 8th, InDeep held a Consortium Meeting in the Amsterdam University Library. The day featured talks on recent research progress, a tutorial, and plenty of opportunity to discuss the strengths and weaknesses of interpretability techniques in text, translation, speech and music.

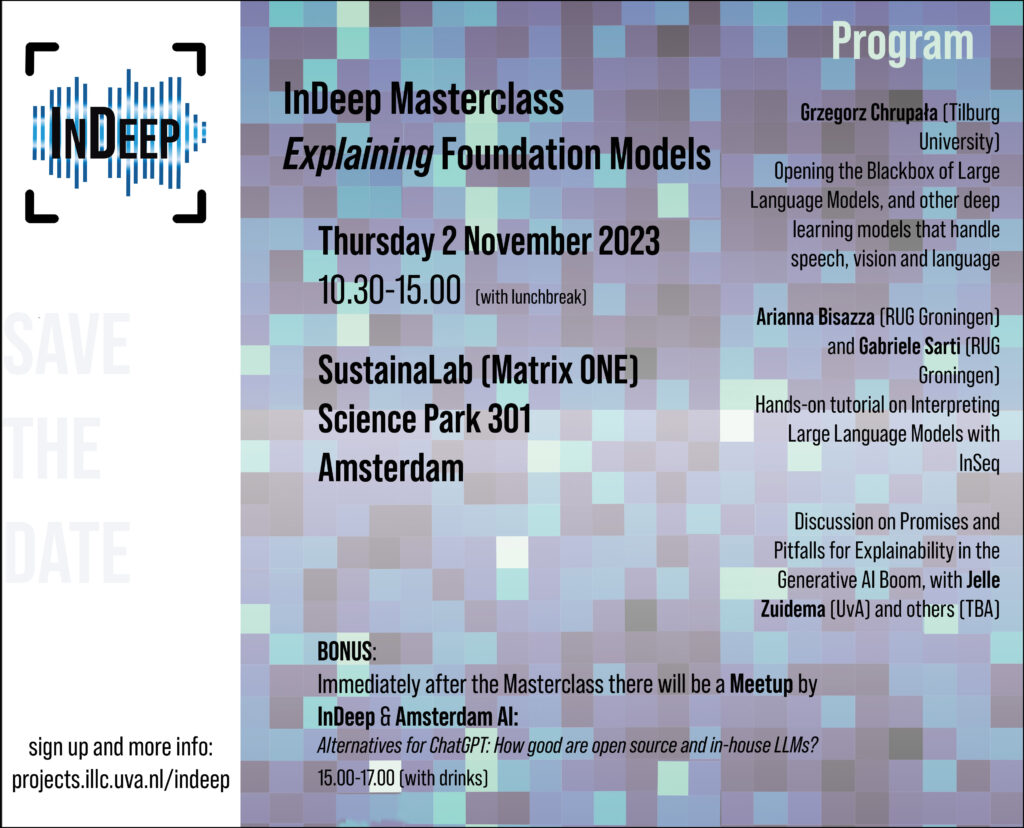

InDeep Masterclass: Explaining Foundation Models

On Thursday 2 November 2023, InDeep organized a Masterclass on Explaining Foundation Models. The event featured inspiring talks, hands-on experience with explainability techniques for Large Language Models, and the opportunity to share insights and experiences. After the Masterclass there was a Meetup organized by InDeep and Amsterdam AI on "Alternatives for ChatGPT: How good are open source and in-house LLMs?" To learn more about this event, you can download an overview of the presentations and slides.