Six videos that explain and explore how to open the Black Box of Large Language Models

With the enormous impact that Artificial Intelligence models have on science, technology and society, many people are looking for ways to make these models more transparent and to better understand what is happening inside them. In the field of Explainable AI, researchers have come up with many different tools to help achieve these goals. These tools are called ‘interpretability methods’. Understanding the limits of interpretability methods, as well as finding ways to understand more of what is happening inside the black box of Transformer models, is crucially important for both engineers and policy makers.

In this video series, made by the InDeep research group and presented by our (affiliated) researchers, we will answer why interpretability is important in the age of LLMs, discuss how to explain the behaviour of neural models, demonstrate why it is crucial to track how Transformers mix contextual information, show how to best measure context-mixing in Transformers, explain what a circuit is, and show how to use circuits to reverse-engineer model mechanisms.

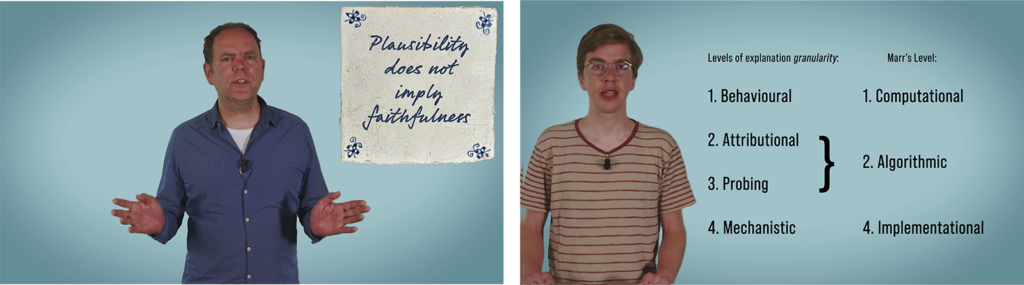

In the first video of this series we will explore the state of the field and give an introduction to the extremely challenging task of explaining the behaviour of large-scale neural networks. In our second video we look at how we can leverage insights from the field of cognitive science to help us interpret large-scale neural networks. However, as this video also shows, merely employing general-purpose interpretability methods may not lead to reliable findings. To address this shortcoming, we may need to interpret models at a more fine-grained level.

1- Why Interpretability is important in the Age of LLMs Dr Jelle Zuidema, Associate professor, University of Amsterdam

2- How can we explain the behaviour of neural models? Jaap Jumelet, PhD candidate, University of Amsterdam

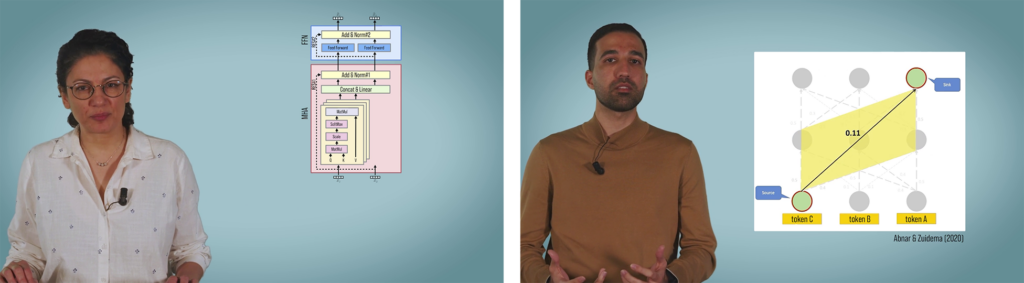

In the next two videos we dive deeper into the inner workings of interpretability methods and demonstrate various methods that have been proposed to create explanations that are more faithful to a model’s actual behaviour. The third video introduces context mixing and discusses its importance for understanding how Transformers process data and build contextualized representations. In video four we will learn about methods to measure context-mixing in Transformers, but also that some of these methods are not as well-equipped as they first seem.

3- Why it is crucial to track how Transformers mix contextual information Dr Afra Alishahi, Associate professor, Tilburg University

4- How to best measure context-mixing in Transformers Hosein Mohebbi, PhD candidate, Tilburg University

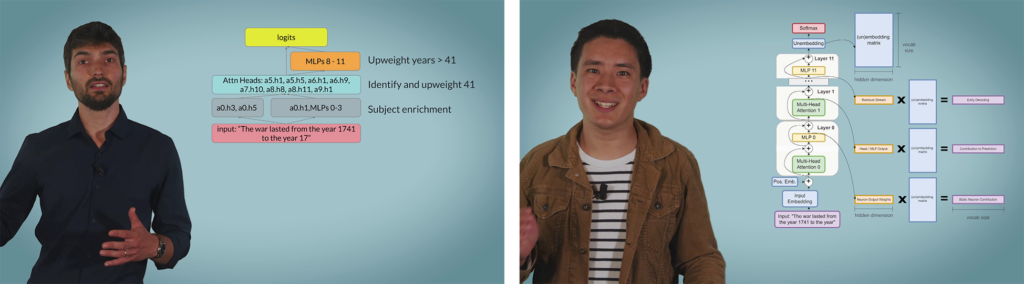

In the final two videos of this series, we’ll explore other types of Transformer-specific methods that take the model’s decision into account and find subnetworks in the model that are responsible for performing specific subtasks. In video five we will introduce circuits, a new framework for providing low-level, algorithmic explanations of language model behaviour. But how do you find a circuit? In the sixth video of this series, we’ll explain the techniques used to uncover circuits for any task.

5- What is a circuit, and how does it explain LLM behavior? Dr Sandro Pezzelle, Assistant professor, University of Amsterdam

6- How to reverse-engineer model mechanisms by finding circuits Michael Hanna, PhD candidate, University of Amsterdam

We hope you will enjoy and learn from this first set of videos. We hope to make more videos, as there is plenty more to explore in the world of interpretability and circuits.