Join our InDeep Newsletter, where we periodically update you on current news, articles, and upcoming events related to the project. Read our most recent newsletter here.

Our InDeep PhD candidate Gabriele Sarti successfully defended his dissertation, From Insights to Impact: Actionable Interpretability for Neural Machine Translation, on 11 December 2025 at the University of Groningen. Gabriele is the first PhD candidate within InDeep to complete and defend his doctorate, and he did so with outstanding expertise and enthusiasm, earning the distinction of cum laude. We warmly congratulate Dr. Gabriele Sarti and wish him every success in his future career.

You can read his dissertation here.

InDeep’s Fourth Year

In September 2025, InDeep turned 4! The fourth year has been fantastically productive. Unfortunately, however, reaching our 4th birthday also means that the first two InDeep PhD students, Gabriele Sarti and Hosein Mohebbi, have reached the end of their contracts. Gabriele will defend his PhD thesis on December 11th, 2025 at the Academiegebouw in Groningen. Hosein's defense still needs to be scheduled, but it is coming up as well.

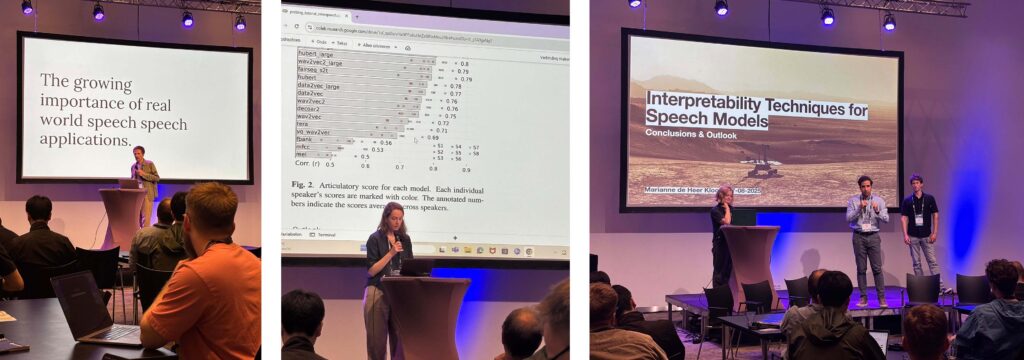

One of the highlights of the fourth year has been the tutorial on Interpretability Techniques for Speech Models that a large team of InDeep researchers prepared and delivered at Interspeech’25 in Rotterdam.

The materials for this tutorial are still available here. They cover probing for phone identity, stress patterns, syllable type, and many other aspects of linguistic structure; feature attribution and representational similarity analysis as practical tools to better understand how, for example, speech recognition systems arrive at their output; and value zeroing and interchange interventions as examples of causal interventions that go beyond merely correlational evidence.

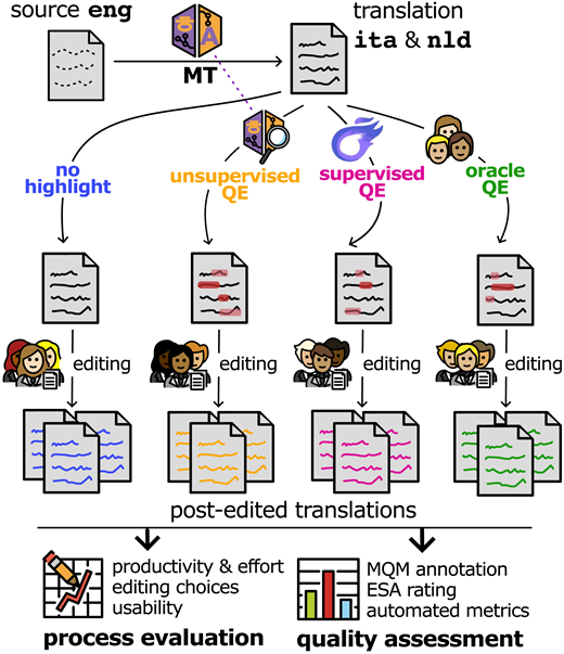

Another highlight of the fourth year is the successful completion and publication of the QE4PE project: Quality Estimation for Post Editing. This project addresses a major issue for the practical use of machine translation technology, which by 2025 has become very useful but is still far from error-free: where should human effort be focused to check and improve translation output? The results of the study are nuanced: even the best currently available automatic tools for highlighting words that the translation system is unsure about do not necessarily help human translators become more productive. To obtain these results, the project developed datasets and tools that we will build on in future projects, and involved 42 professional post-editors across two translation directions. It was published in the field's prime venue, Transactions of the ACL. Slator wrote a piece on the QE4PE findings.

Explainability and Interpretability Worldwide 2025

While the InDeep project steadily progresses, developments in AI worldwide have continued at breakneck speed. That includes developments in Mechanistic Interpretability (MechInterp), which has generated much excitement; all the major frontier labs in (generative) AI, including Anthropic, OpenAI and Google DeepMind, now have dedicated MechInterp teams. The MechInterp community has published hundreds of papers in the past year. One result from Anthropic's team has garnered considerable interest: in experiments with Claude 3.5 Haiku, the team showed that the model plans ahead in a nontrivial way when asked to write rhyming poetry. The finding relied on a popular interpretability technique, sparse autoencoders, combined with causal interventions. Although the result is not of immediate practical use, it has come to play a big role in the scientific understanding of, and debates about, how far the latest generation of LLMs moves beyond "mere" next-word prediction.

Another success story of the interpretability field is a project by colleagues at the University of California (Berkeley and San Francisco), who used the internal states of a state-of-the-art speech model to help patients with brain damage speak again. The paper on the project, "A streaming brain-to-voice neuroprosthesis to restore naturalistic communication", was published in Nature Neuroscience in March 2025. It crowns a series of interpretability papers from Anumanchipalli's group at Berkeley, with PhD student Cheol Jun Cho, in which they used diagnostic probing techniques to show that the internal states of large speech models learn to represent the movements of the mouth, tongue and vocal tract ("articulatory trajectories") just from being exposed to hundreds of thousands of hours of spoken language. A video showing the neuroprosthesis in action with a real patient is available on YouTube. The work of Cho and Anumanchipalli was also featured in InDeep's Interpretability Techniques for Speech Models tutorial discussed above.

InDeep Principal Investigator Iris Hendrickx, together with master's student Daan Brugmans, presented a new speech-based demo at the Speech Science Festival at Interspeech 2025 on August 17 in Rotterdam. The demo took the form of a game in which the user talked with the system in Dutch or English, and the system tried to guess the user's emotions from the speech signal.

The demo showcased the state of the art in speech technology, as well as the types of information that current systems still find very hard to make interpretable for users. Many researchers from all over the world took part in the Speech Science Festival, and the event attracted a few hundred visitors, with over 50 people trying out our InDeep demo.

Our own PhD student Marcel Vélez Vásquez co-organised the AI Song Contest 2025 at the Melkweg in Amsterdam, with John Ashley Burgoyne supporting the collection and verification of the jury and public voting results. After an electrifying award show featuring performances from all ten finalists, GENEALOGY emerged as this year's winner, claiming both the public vote and the overall award. Visit aisongcontest.com to watch the full awards show and to learn more about all of the contest entries.

Our InDeep member Afra Alishahi has been appointed Professor of Computational Linguistics within the Department of Cognitive Science and Artificial Intelligence of Tilburg School of Humanities and Digital Sciences. Congratulations Afra! Read more here.

Exciting news! InDeep has made a video series that explains and explores how to open the black box of Large Language Models. We hope to make more videos, as there is plenty more to explore in the world of interpretability and circuits. Go to our video series page to view them.

The paper "ChatGPT: Five priorities for research" by InDeep member Willem Zuidema and colleagues, published in Nature, was ranked #15 among the most cited papers in all of AI in 2023, according to an analysis by Zeta Alpha.

InDeep principal investigator Arianna Bisazza was recently awarded a Vidi, a prestigious personal grant funded by NWO. The project starts in February 2024 and aims to improve language modeling for low-resource languages, taking inspiration from insights into child language acquisition.

Arianna Bisazza, together with Jirui Qi and Raquel Fernández, received an Outstanding Paper Award at EMNLP 2023 and a Best Data Award at the GenBench Workshop 2023 for the paper Cross-Lingual Consistency of Factual Knowledge in Multilingual Language Models.

Also at EMNLP 2023, InDeep PhD candidate Hosein Mohebbi, together with his supervisors Grzegorz Chrupała, Jelle Zuidema, and Afra Alishahi, received an Outstanding Paper Award for Homophone Disambiguation Reveals Patterns of Context Mixing in Speech Transformers.

Read the award winning paper here.

Following the launch of ChatGPT and the media storm around it, several InDeep members worked hard to inform the general public about developments in AI and NLP.

InDeep members Arianna Bisazza and Gabriele Sarti were among the presenters at "An Evening with ChatGPT", an open event organised by the GroNLP group about the risks and opportunities of new language technologies. You can watch the presentations of Arianna Bisazza and Gabriele Sarti, or go to the full event and see the slides.

Project leader Jelle Zuidema appeared in various Dutch popular media to comment on these developments, including the TV program Nieuwsuur and the national newspapers NRC and Volkskrant. He also spoke at 'An Afternoon with ChatGPT' at the University of Amsterdam, an event modelled after the Groningen initiative.

Computer says no, but why? In February 2022, NWO published a feature on the InDeep project in its magazine, with quotes from Jelle Zuidema, Ashley Burgoyne and Jurjen Wagemakers (Floodtags).